4 Identifying Distinct Subpopulations

Brief Chapter Summary

Subgroup discovery algorithms often generate redundant descriptions of subpopulations. We present a workflow to extract a small number of representative rules from a large set of classification rules. These so-called proxy rules minimize instance overlaps across rule groups, thus covering different subpopulations. We evaluate our workflow on SHIP data samples with hepatic steatosis and goiter as target variables, respectively.

This chapter is partly based on:

Uli Niemann, Myra Spiliopoulou, Bernhard Preim, Till Ittermann, and Henry Völzke. “Combining Subgroup Discovery and Clustering to Identify Diverse Subpopulations in Cohort Study Data.” In: Computer-Based Medical Systems (CBMS). 2017, pp. 582-587. DOI: 10.1109/CBMS.2017.15.

Epidemiologists search for significant relationships between risk factors and a target variable in large and heterogeneous datasets that encompass participant health information gathered from questionnaires and medical examinations [80]. The previous chapter describes an expert-driven workflow that can help epidemiologists automatically detect such relationships in the form of classification rules, which are descriptions of risk factors and predictive value ranges for a specific subpopulation and an target variable of interest. However, rule induction algorithms often produce large and overlapping rule sets requiring the expert to manually pick the most informative rules and remove less informative or redundant ones. This post-filtering step is time-consuming and tedious.

This chapter presents a clustering-based algorithm that hierarchically reorganizes large rule sets and summarizes all essential subpopulations while maintaining distinctiveness between the clusters. For each cluster, a representative rule is shown to the expert who then can drill down to other cluster members. We evaluate our algorithm on two subsets of SHIP where the target variables hepatic steatosis and goiter serve as target variables, respectively. Further, we report on the effectiveness of our algorithm, and we present selected subpopulations.

We propose SD-Clu, an approach that combines subgroup discovery with clustering to return \(k\) representative classification rules. Building upon a set of potentially highly overlapping rules generated by an SD algorithm, we leverage hierarchical agglomerative clustering to find groups of rules that cover different sets of instances. For each cluster, we nominate one rule as the group’s representative that exhibits best tradeoff between rule confidence and coverage towards the target variable. We define the similarity between a pair of subgroups based on the fraction of mutually covered instances and individually covered instances. Rules covering (almost) the same instances are likely to be condensed into the same cluster and thus more likely to be represented by a proxy rule.

Section 4.1 serves as motivation for the related field of subgroup discovery and redundancy of classification rules. Section 4.2 presents our SD-Clu algorithm that generates distinct rules. Section 4.3 describes the experimental setup. In Section 4.4, we report on our evaluation results. In Section 4.5, we discuss our findings regarding hepatic steatosis and goiter on the SHIP data. In Section 4.6, we summarize our contributions.

4.1 Motivation and Comparison to Related Work

Subgroup discovery (SD) algorithms aim to uncover “interesting” relationships between one or more conditions (variables and value ranges) and a target variable in the form of classification rules [110], [120]. Compared to more accurate but predominantly opaque black-box models such as neural networks, support vector machines, or random forests, SD algorithms yield more interpretable results, making them highly suitable for domain expert-guided subpopulation discovery. SD algorithms have been used in several medical studies where descriptive knowledge needed to be inferred, e.g., to extract potential drug targets from multi-relational data sources for the treatment of dementia [121], to identify predictive auditory-perceptual, speech-acoustic, and articulatory-kinematic features of preschool children with speech sound disorders [122], and to discover discriminative features in patient subpopulations with different admission times to psychiatric emergency departments [123].

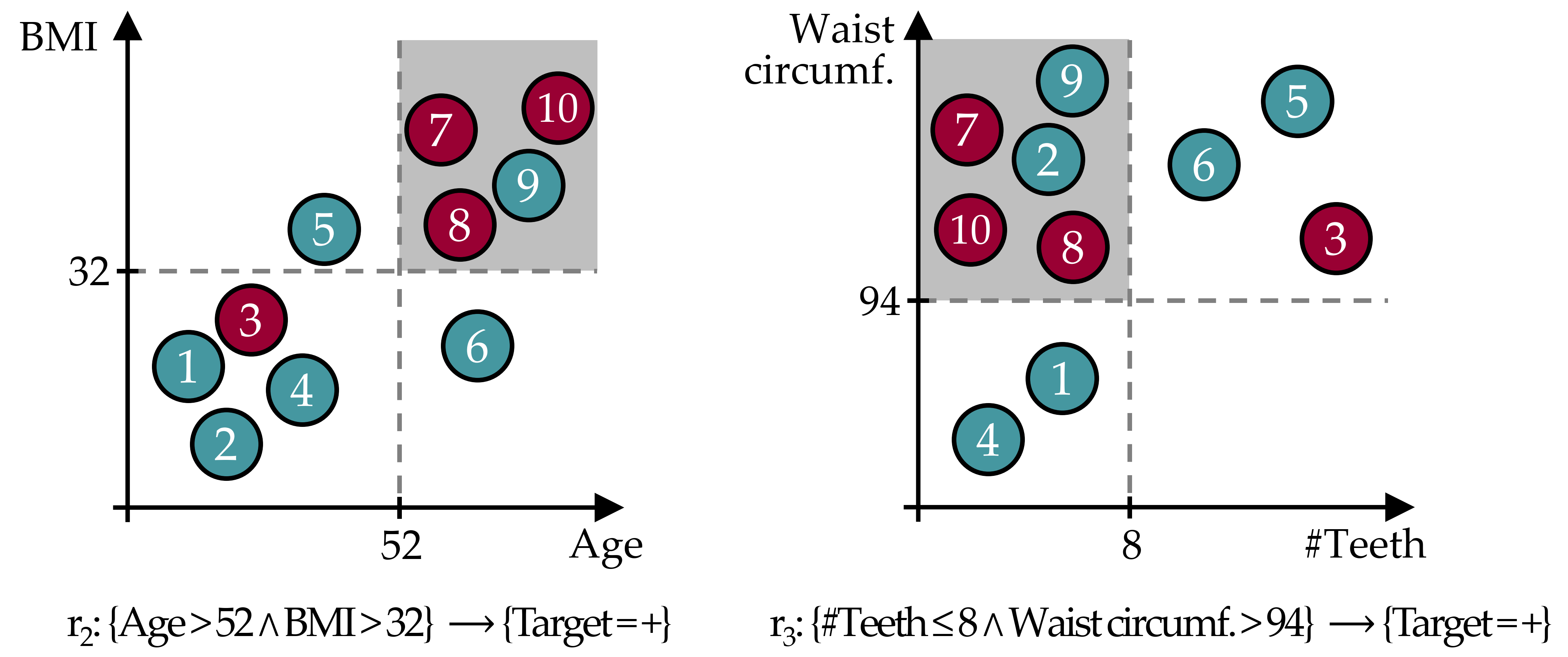

However, SD methods often yield large sets of rules that domain experts are not willing to tediously go through and manually separate interesting from irrelevant or redundant ones. A common observation is that there are groups of rules that cover almost the same set of instances, as shown in Figure 4.1. Instead of presenting all rules found by an SD algorithm at once, we propose to organize rule sets hierarchically so that the domain expert can explore a compact set of different subpopulations, equipped with mechanisms to drill down to specific rules of interest.

Figure 4.1: Example of redundant rules. Both \(r_2\) and \(r_3\) cover the instances 7,8,9, and 10; \(r_3\) additionally covers instance 2. The cover set overlap is due to the high correlation between #Teeth and Age; and between BMI and Waist circumference. Both rules describe the same subpopulation, i.e., elderly overweight people are at higher risk of developing the disease.

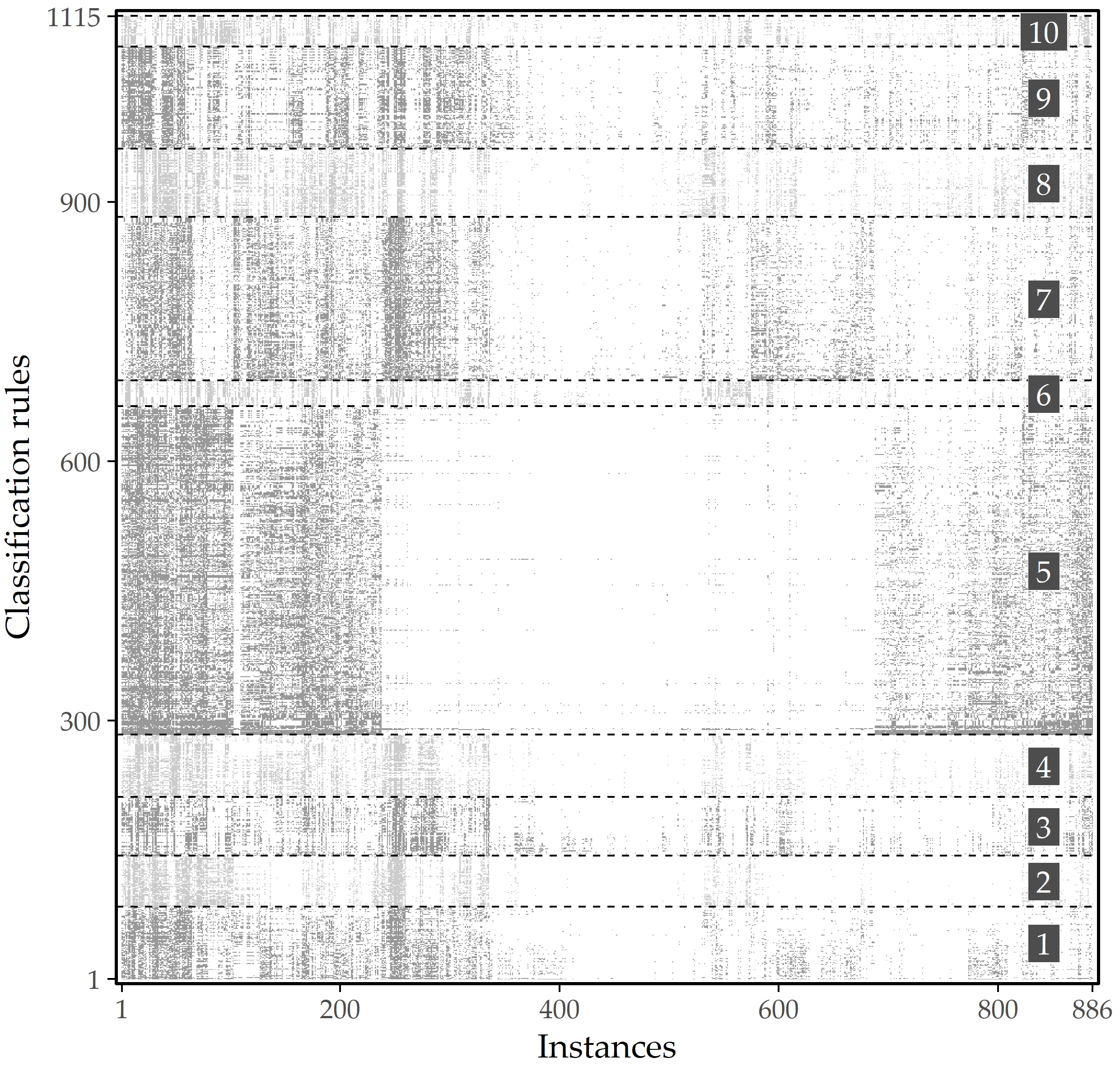

Similar to the HotSpot algorithm described in Section 3.2.3, popular SD algorithms such as SubgroupMiner [124], SD [125], and CN2-SD [126] use a fixed beamwidth [109] to limit the number of further expanded subgroup candidates at each iteration. A post-pruning step can be applied to reduce the size of the rule set – e.g., to return the top k rules – using a quality criterion such as the Weighted Relative Accuracy [127] or the p-value of a statistical test. Even when both beamwidth search and top k pruning are applied, the result often still contains redundant rules. This is due to the correlation between the (non-target) variables, which leads to a large number of variations of a given finding, cf. Figure 4.2 for an illustrative example. In particular, top k pruning leads to different variations of the same subpopulation (i.e., high instance overlap among rules) [128].

Figure 4.2: Illustration of rule redundancy. Graphical representation of 1115 HotSpot rules found on SHIP data on 886 labeled instances. A gray cell indicates that the instance at that x-position is covered by the rule at the associated y-position. Instances and rules are sorted by agglomerative hierarchical clustering. When partitioning into 10 clusters based on the covered instances, each cluster’s rules describe similar subpopulations.

Unlike SD algorithms which generate multiple rules that overlap in terms of their coverage sets, predictive rule learning algorithms such as CN2 [129] or RIPPER [130] are designed to generate rules that capture different subpopulations. They work iteratively according to a divide-and-conquer strategy [109] as follows: First, a rule that maximizes the algorithm’s quality function is generated on the set of instances not yet covered. Second, all covered instances are removed from the training set. This process of rule induction and removal of instances from the training set is repeated until all instances are covered by at least one rule. The output of this algorithm is often a decision list. To classify an instance, the prediction of the first rule that covers the instance is used. While these algorithms avoid rule redundancy, important subpopulations may remain undiscovered because of order dependencies. For example, the algorithm might induce a rule that includes instances with a high BMI, but it might not find a slightly weaker association with income because those instances are immediately removed from the instance candidate space. Also, the coverage of rules generally decreases with each iteration. Rules with low coverage are negligible in epidemiological studies because they may represent spurious correlations of the study sample.

Instead of merely eliminating covered instances from rule induction of subsequent iterations, weighted covering approaches consider how many times instances have been covered so far in the rule candidate expansion step [126]. While this leniency in removing instances allows for a larger number of rules, a new hyperparameter is introduced to control the tradeoff between reusing already covered instances and minimum rule confidence. This parameter is unintuitive and difficult to set, especially for domain experts. Moreover, both traditional predictive rule learning algorithms and their weighted covering extensions introduce order dependencies between rules: a rule depends on all previous rules in the rule list and the instances of the target variable it covers. It may not make sense to interpret a single rule.

4.2 Finding Distinct Classification Rules

This section presents our algorithm SD-Clu, which combines subgroup discovery with clustering to return a set of \(k\) distinct classification rules. The algorithm consists of three main steps. First, the SD algorithm generates a set of (potentially highly overlapping) rules. Using hierarchical agglomerative clustering, this set of rules is then grouped into a distinct rule clusters covering different sets of instances. For each cluster, the rule with the best tradeoff between confidence and coverage of the target variable is appointed as the representative of the group. Rules covering the same instances are grouped in the same cluster and are therefore represented by the same proxy rule.

4.2.1 Rule Clustering and the Concept of “Proxy Rules”

We use the same notation for classification rule discovery as in Section 3.2.1. Agglomerative hierarchical clustering iteratively merges clusters of similar instances in a bottom-up way. Here, the instances are rules. Hence an alternative definition of a distance measure is required. The order of merging two clusters depends on the linkage strategy. In complete linkage, the distance between two clusters is defined as the maximum distance between any two of their instances. The pair of clusters minimizing this maximum distance is selected for merging.

We define rule similarity for clustering on the basis of the mutually covered instances as an adaption of the Sørensen–DICE coefficient [131]. The distance between two rules \(r_1\), \(r_2\) with corresponding subpopulations (cover sets) \(s(r_1)\), \(s(r_2)\) is given as \[\begin{equation} \text{dist}(r_1,r_2) = 1 - \frac{2\cdot\left|s(r_1)\cap s(r_2)\right|}{\left|s(r_1)\right| + \left|s(r_2)\right|}. \tag{4.1} \end{equation}\]

We propose two ways of completing the hierarchical rule clustering process:

- Specification of the maximum number of clusters \(k\)

- Discovery of the optimal \(k\) based on an internal clustering index such as the Silhouette coefficient.

For a clustering \(\xi\) and a set of rules \(R\), the Silhouette coefficient is calculated as \[\begin{equation} \text{Silh}(R,\xi) = \frac{1}{|R|}\sum_{r\in R}{\frac{b(r)-a(r)}{\max\left\{a(r), b(r)\right\}}} \tag{4.2} \end{equation}\] where \[\begin{equation} a(r)=\frac{\sum_{y\in{}Y}\text{dist}(r,y)}{|Y|-1} \tag{4.3} \end{equation}\] is the average dissimilarity between \(r\) and the other rules in the cluster \(Y\in\xi\) which contains \(r\), while \[\begin{equation} b(r)=\frac{\sum_{y\in{}Z}\text{dist}(r,y)}{|Z|} \tag{4.4} \end{equation}\] is the average dissimilarity between \(r\) and the rules in the cluster \(Z\in\xi\) which is the closest to the cluster \(Y\) containing \(r\). Basically, any other cluster quality function could be used. We choose the Silhouette coefficient here because of its understandable interpretation. The value of the silhouette coefficient can vary between -1 and 1. A negative value is undesirable because it represents a case where the average distance to rules in the same cluster is greater than the minimum average distance to rules in another cluster. We prefer a positive silhouette coefficient, i.e., a value close to the maximum of 1.

Then, we traverse the dendrogram bottom-up, compute the Silhouette for each set of clusters \(\xi\), and select as \(\xi_{opt}\) the set of clusters with the best Silhouette value. The optimal number of clusters is then the cardinality \(|\xi_{opt}|\). Finally, we map each cluster \(Y\in\xi_{opt}\) to a representative rule. To do so, we invoke the rule interestingness measure Weighted Relative Accuracy [127] (WRA hereafter), which balances coverage and confidence gain and is defined as \[\begin{equation} WRA(r)=Cov(r)\cdot\left(Conf(r)-\frac{n_{T=v}}{N}\right) \tag{4.5} \end{equation}\] where \(N\) is the total number of instances in the dataset and \(n_{T=v}\) is the number of instances with the target variable value of interest. We compute WRA for each rule \(r\in{Y}\) and select as cluster proxy \(cp(Y)\) the rule for which WRA is maximum.

4.2.2 Representativeness of a Set of Proxy Rules

The proxy rules should be a good representation of the total rule set. Thus, each instance should be covered equally often by the cluster proxies compared to the total rule set. Hence, we define representativeness as the difference between the average fraction of proxy rules the instances are covered by and the total average fraction of rules the instances are covered by. If the difference between the two ratios is small, then the proxy rules’ representativeness is high.

Typically, the set of rule clusters \(\zeta\) for a set of rules \(R\) will be the optimal set of clusters, as described in the previous subsection, but it can be any set of clusters chosen by the user, as long as it contains all rules in \(R\). For \(\zeta\), let \(R_{\zeta}=\{cp(Y)|Y\in\zeta\}\) denote the set of proxy rules. To quantify how representative such a set of rules is, we proceed as follows. First, let \(U\subseteq{}R\) be an arbitrary subset of the complete set of rules, and let \(x\) be a data instance. The coverage rate of \(x\) towards \(U\) is calculated as \[\begin{equation} covRate(x,U) = \frac{\sum_{r\in{}U}isCovered(x,r)}{|U|} \tag{4.6} \end{equation}\] where \(isCovered(x,r)\) is equal to 1, if \(r\) covers \(x\), i.e., \(x\in s(r)\), and \(0\) otherwise. We observe that for a set of rules \(U\), an instance \(x\) cannot be covered by more than \(|U|\) rules. Let \(R_x\) be the set of rules that cover instance \(x\), i.e., for \(R_x=\{r\in{}U | isCovered(x,r)=1 \}\). For the whole set of instances \(X\), we create bins: \[\begin{equation} bin_i(U)= \{x \in{}X | |R_x|=i \}. \tag{4.7} \end{equation}\] Further, let \(bin_0(U)= \{x \in{}X | \forall r\in U : isCovered(x,r)=0 \}\). An instance \(x\) can be covered by \(0, 1, \ldots, |U|\) rules, i.e., \(covRate(x,U)\) can take one of \(|U|+1\) values. In contrast, \(covRate(x,R)\) can take one of \(|R|+1\) values, a usually much larger number. Therefore, we map the possible values of \(covRate(x,R)\) into the much smaller set of possible values by rounding, computing: \[\begin{equation} adjCovRate(x,U, R)=\frac{\lfloor covRate(x,R)\cdot|U| \rceil}{|U|} \tag{4.8} \end{equation}\] where \(\lfloor\rceil\) is the rounding operator. Then, for the complete set of instances \(X\), a set of induced rules \(R\), the clustering \(\zeta\) over \(R\) and the set of proxy rules \(R_{\zeta}\), the \(representativeness\) of \(R_{\zeta}\) is defined as \[\begin{equation} representativeness(R_{\zeta},R)=1- \frac{1}{|X|}\sum_{x\in{}X} |adjCovRate(x,U,R) - covRate(x,R_{\zeta})|. \tag{4.9} \end{equation}\]

We investigate how the \(representativeness\) changes with an increasing number of proxy rules \(k\) for different criteria to select the cluster’s proxy rule. Ideally, \(representativeness\) is high while \(k\) is low. In other words, the number of proxy rules should be as small as possible, but these rules should cover all important subpopulations within the data. For a fixed \(k\), we compare our strategy of selecting the cluster’s rule with the highest WRA as the proxy rule against three baselines: the top \(k\) rules according to (i) WRA, (ii) odds ratio, and (iii) coverage.

4.3 Experimental Setup

Datasets.

To evaluate our method, we used data from the SHIP study.

For a description of SHIP, see Section 2.2.1.

We considered hepatic steatosis (see Section 3.2.5) and goiter as target variables.

For the sample HepStea, we derived a binary target variable by discretizing the liver fat concentration obtained from the MRI report, such that study participants with a concentration no greater than 10% were assigned to the negative class, and values greater than 10% were assigned to the positive class indicating the presence of the disease.

Of 886 participants for whom the MRI report from SHIP-2 was available at that time, 694 (78.3%) were negative, and 192 (21.7%) were positive.

We considered 99 variables selected exclusively from SHIP-0 to assess their long-term effects expressed ten years later in SHIP-2.

For the Goiter sample, the target variable was derived by thyroid ultrasound.

The presence of goiter was defined for a thyroid volume greater than 18 ml in women and 25 ml in men [132].

Of the 4400 participants for whom the target variable is available in TREND-0, 3010 belong to the negative class (68.4%) and 1390 (31.6%) to the positive class.

Apart from the target variable, we use a total of 182 variables that were pre-selected by a medical expert as potential risk factors.

SD algorithms. For subgroup discovery, we use our approach from Chapter 3, the algorithm HotSpot [133] (Section 3.2.3). We further try SD-Map [134], an exhaustive algorithm that adapts the popular FP-Growth association rule learning method [90]. Rules that fall below a minimum coverage threshold are pruned. A depth-first search is performed for candidate generation. Rules are ranked according to a user-defined quality function. We use the implementation from the VIKAMINE framework [135]. The implementation of SD-Map only supports categorical variables. Therefore, each numeric variable is discretized using the minimum description length based approach of Fayyad and Irani [136].

For SD-Map, we set the minimum coverage threshold to 0.05 to avoid overfitting, too small rules. We use WRA as a quality function and define a minimum threshold of 0.025. For HotSpot, we set the support threshold of a rule to be above 0.05. The beamwidth is set to 500. Furthermore, to avoid rather meaningless literals, we restrict the extension of a rule body with another literal to a relative confidence gain of at least 0.3. To avoid having many overly specific rules that cover only a small number of study participants, we limit the length of a rule body, i.e., the number of literals to 3. To determine the optimal number of proxy rules \(k\), we compute the Silhouette coefficient for every possible number of clusters.

4.4 Results

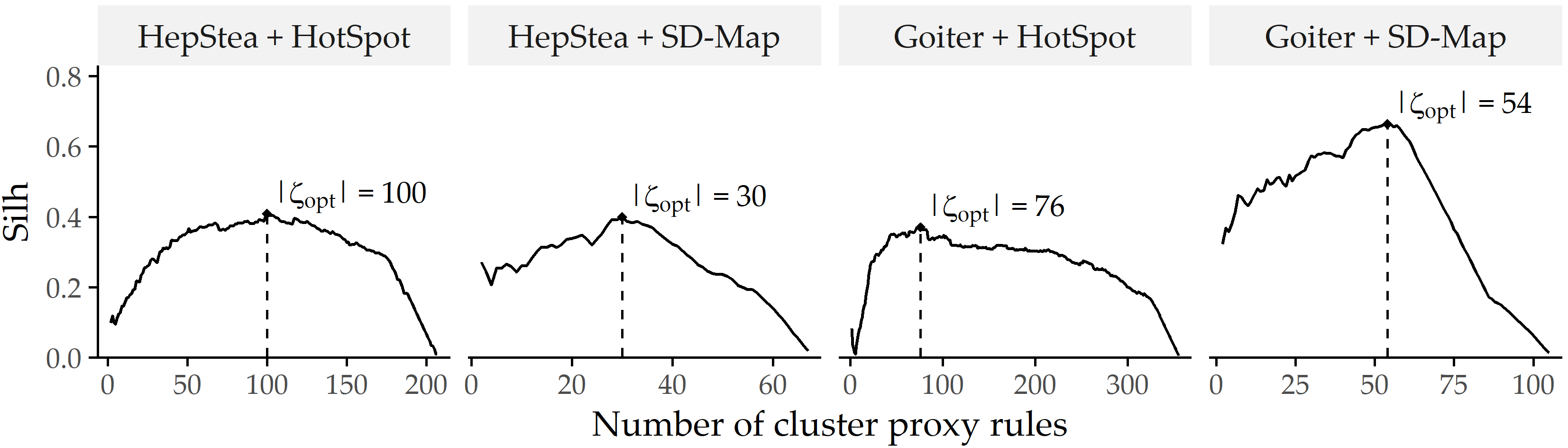

Figure 4.3 shows the optimal number of clusters for each study sample combination and SD algorithm.

Table 4.1 lists the optimal \(k\) and the ratio of proxy rules vs. the total number of rules.

For example, clustering with optimal Silhouette coefficient for the algorithm HotSpot on Goiter has 76 clusters and thus 76 proxy rules (cf. Table 4.1), which is only 21.3% of the total number of rules.

Thus, if only the cluster proxies are initially displayed to the expert, the time needed to check the rules is reduced by 78.7%.

Figure 4.3: Silhouette coefficients (\(Silh\)) of SD-Clu using complete linkage for each combination of dataset and algorithm. The number of clusters \(k\) with the highest \(Silh\) score (\(|\zeta_{opt}|\)) is indicated by a dashed vertical line.

| Dataset | Algorithm | \(|R|\) | \(Silh(\zeta_{opt})\) | \(|\zeta_{opt}|\) | \(\frac{|\zeta_{opt}|}{|R|} (\%)\) |

|---|---|---|---|---|---|

| HepStea | HotSpot | 208 | 0.41 | 100 | 48.1 |

| HepStea | SD-Map | 68 | 0.40 | 30 | 44.1 |

| Goiter | HotSpot | 356 | 0.37 | 76 | 21.3 |

| Goiter | SD-Map | 106 | 0.66 | 54 | 50.9 |

The optimal number of clusters \(k\) of \(|\zeta_{opt}|\) shown in Figure 4.3 could be used as a suggestion, but the expert is free to specify the number of rules they wish to obtain.

For example, if the expert considers \(|\zeta_{opt}|\) = 100 too large for HotSpot on HepStea, the diagram would show them a reduction to \(|\zeta_{opt}|\) = 58 is possible, reducing \(Silh\) only slightly from 0.48 to 0.37.

Also in the other direction: if \(|\zeta_{opt}|\) is relatively low, the diagram shows that a small increase does not change \(Silh\) much; therefore, the added rules may also be important.

The expert could even analyze the diagram to derive a range instead of a single value, e.g., the range where \(Silh\) is above 90% of its maximum.

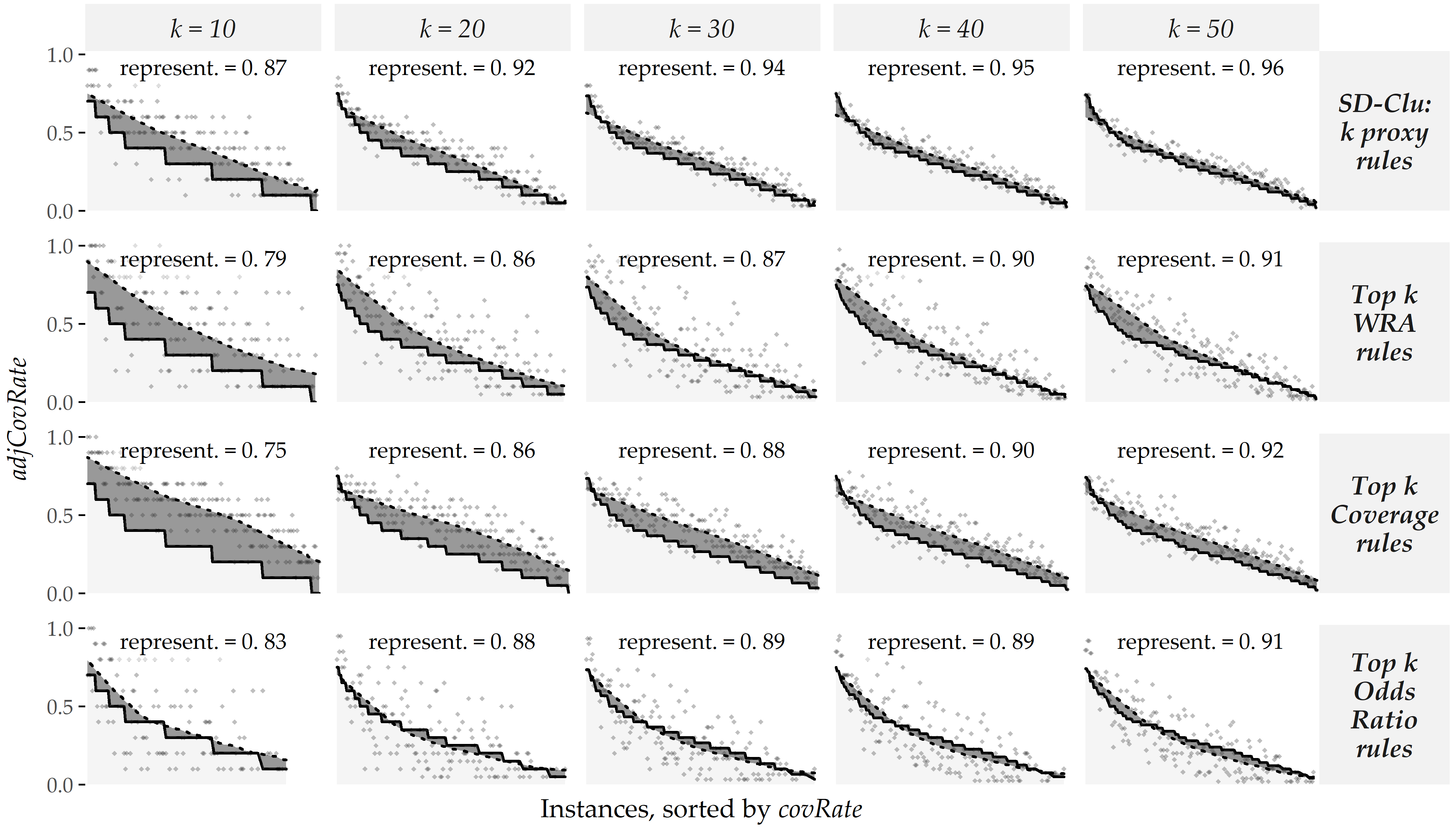

To assess the representativeness of the cluster proxies, we compare them with three baseline criteria that return the top \(k\) rules according to odds ratio (baseline 1), coverage (baseline 2), and WRA (baseline 3).

Figure 4.4 juxtaposes the representativeness of SD-Clu and the three baselines for different numbers of rules \(k\) returned to the expert for the HotSpot algorithm on the sample HepStea.

The plot matrix’ panels are arranged by rule selection method (rows), and the number of representative rules \(k\) returned to the expert (columns).

Each graph shows the \(adjCovRate\) (y-axis) of instances (x-axis) for \(R\) (solid black curve).

The instances are sorted by the number of rules in \(R\) they are covered by, with their respective \(covRate\) shown as dots.

The dotted curve represents a locally weighted scatterplot smoothing (LOWESS) [137] of the points.

Ideally, both curves are close to each other, meaning that the instances are covered by cluster proxy rules approximately by the same proportion as all rules cover them.

Figure 4.4: Evaluation of representativeness on HepStea using the HotSpot algorithm. \(representativeness\) of SD-Clu and three baseline approaches for different numbers of clusters \(k\) on the HepStea sample using HotSpot. Points depict an instance’s \(adjCovRate\) for the set of representative rules of the approach (row) and \(k\) (column). Instances are sorted by \(covRate\) with respect to \(R\), i.e., the set of all rules) in descending order, shown by a solid black curve. A dotted curve depicts a LOWESS regression fit on the points. The similarity between solid and dotted curves illustrates \(representativeness\) of the top \(k\) rules of the respective approach, illustrated by the dark gray area in-between solid curve and dotted curves. Smaller areas are better and reflect higher \(representativeness\) values.

For all approaches, \(representativeness\) improves as \(k\) increases. For example, when we increase \(k\) from 10 to 50, \(representativeness\) increases from 0.87 to 0.96 for SD-Clu, which means that the absolute difference between \(adjCovRate\) of \(\zeta\) and \(covRate\) of \(R\) over all instances successively decreases. Further, for a given \(k\), the representative rules of the baselines are less representative than SD-Clu’s proxy rules, e.g., 0.91, 0.92, and 0.91 vs. 0.96 for \(k=50\), respectively (cf. 5th column of plot matrix in Figure 4.4).

4.5 Discussion of Findings

Tables 4.2 and 4.3 show the antecedent, support, and confidence of proxy rules found by two algorithms for HepStea and Goiter with \(k\)=5.

The prevalence of hepatic steatosis or goiter is significantly higher in each of the subpopulations described by these rules than in the corresponding overall population.

These subpopulations are characterized by known risk factors for hepatic steatosis, such as large waist circumference and BMI, blood pressure and hypertension, advanced age, and high values in some medical tests (ALAT and LDL).

Furthermore, apolipoprotein A1 (ApoA1), a major protein component of high-density lipoprotein (HDL) particles in plasma, is associated with target variable in elderly patients (see fourth hotspot rule in Table 4.2).

Lipoprotein metabolism is considered the main process contributing to the development of fatty liver [138].

Besides, Poynard et al. [139] found that patients with hepatic steatosis had higher levels of ApoA1 than patients with hepatic fibrosis, who in turn had higher levels than patients with cirrhosis.

The fifth HotSpot rule describes a subpopulation with elevated levels of liver high-sensitivity C-reactive protein (CRP) (approximately 0.80-quantile) and elevated levels of uric acid (approximately 0.36-quantile).

Lizardi-Cervera et al. [140] reported increased ultra-sensitive CRP levels in subjects with hepatic steatosis independent of other metabolic states.

Similarly, Keenan et al. [141] found elevated uric acid levels in patients with hepatic steatosis independent of metabolic syndrome.

| # | Antecedent of proxy rule | Sup | Conf | Lift |

|---|---|---|---|---|

| HotSpot | ||||

| 1 | increased waist circumference = TRUE | 0.72 | 0.37 | 1.69 |

| 2 | hypertension = TRUE | 0.60 | 0.33 | 1.54 |

| 3 | age > 44 \(\wedge\) apolipoprotein A1 \(\leq\) 1.56 g/l | 0.37 | 0.41 | 1.90 |

| 4 | physical health score \(\leq\) 47.3 \(\wedge\) increased waist circumference = TRUE | 0.29 | 0.48 | 2.12 |

| 5 | high-sensitivity C-reactive protein > 2.8 mg/l \(\wedge\) uric acid > 246 μmol/l | 0.29 | 0.46 | 2.21 |

| SD-Map | ||||

| 1 | diastolic blood pressure > 79.75 mmHg \(\wedge\) hip circumference > 98.05 cm \(\wedge\) cholesterol-HDL-quotient > 3.015 | 0.67 | 0.40 | 1.85 |

| 2 | increased waist circumference = TRUE \(\wedge\) diastolic blood pressure > 79.75 mmHg \(\wedge\) body mass index > 26.3 kg/m² | 0.58 | 0.45 | 2.07 |

| 3 | waist circumference > 88.15 cm \(\wedge\) diastolic blood pressure > 79.75 mmHg \(\wedge\) body mass index > 26.3 kg/m² | 0.59 | 0.46 | 2.11 |

| 4 | body mass index > 26.3 kg/m² \(\wedge\) alanin-aminotransferase > 0.385 μkatal/l \(\wedge\) diastolic blood pressure > 79.75 mmHg | 0.55 | 0.48 | 2.20 |

| 5 | body mass index > 26.3 kg/m² \(\wedge\) uric acid > 278.5 μmol/l \(\wedge\) alanin-aminotransferase > 0.385 μkatal/l \(\wedge\) treated urinary tract diseases = TRUE | 0.54 | 0.46 | 2.34 |

Similarly, the identified subpopulations for goiter (see Table 4.3) are characterized by common risk factors, such as increased weight, body mass index and angiotensin II receptor blocker intake (see second HotSpot rule). Furthermore, the first HotSpot rule describes participants with intima-media thickness greater than 0.73 mm (approximately 0.80-quantile). Previous studies found associations between intima-media thickness and thyroid-stimulating hormone [142] as well as subclinical hypothyroidism [143], [144]. The condition of the third HotSpot rule describes the duration of an ECG phase. Jabbar et al. [145] summarized that pathological thyroid hormone levels increase the risk of cardiovascular disease. This association appears to be especially true in the elderly [146]. The fourth rule suggests that certain thrombocyte levels indicate increased thyroid volume, which confirms Erikci et al. [147], who found that hypothyroid patients had higher platelet volume and platelet distribution width than a control group. This shows that our approach delivers relevant results to the application field; here two chronic reversible conditions.

| # | Antecedent of proxy rule | Sup | Conf | Lift |

|---|---|---|---|---|

| HotSpot | ||||

| 1 | intima-media thickness > 0.73 mm | 0.27 | 0.43 | 1.34 |

| 2 | intake of angiotensin II receptor blocker = TRUE | 0.10 | 0.62 | 1.90 |

| 3 | duration of QRS complex on ECQ > 114 ms \(\wedge\) discouraged and sad mood = “never” \(\wedge\) body mass index > 26.65 kg/m² | 0.06 | 0.87 | 2.68 |

| 4 | education level = 8 \(\wedge\) thrombocytes < 209 Gpt/l | 0.11 | 0.62 | 1.92 |

| 5 | aorta descendens thickness > 2.79 mm \(\wedge\) thyroid-stimulating hormone \(\leq\) 1.05 mU/l \(\wedge\) hemoglobin > 8.8 mmol/l | 0.06 | 0.88 | 2.73 |

| SD-Map | ||||

| 1 | intima media thickness > 0.55 mm \(\wedge\) proton pump inhibitors intake = FALSE \(\wedge\) age > 38.5 | 0.68 | 0.44 | 1.34 |

| 2 | duration of ECG P wave > 111 ms \(\wedge\) age > 38.5 \(\wedge\) proton pump inhibitors intake = FALSE | 0.60 | 0.45 | 1.38 |

| 3 | hip circumference > 98.75 cm \(\wedge\) age > 38.5 \(\wedge\) proton pump inhibitors intake = FALSE | 0.60 | 0.44 | 1.34 |

| 4 | increased waist circumference = TRUE \(\wedge\) age > 38.5 \(\wedge\) proton pump inhibitors intake = FALSE | 0.59 | 0.44 | 1.34 |

| 5 | increased waist circumference = TRUE \(\wedge\) intima media thickness > 0.55 mm \(\wedge\) proton pump inhibitors intake = FALSE | 0.51 | 0.46 | 1.41 |

4.6 Conclusion

We have proposed SD-Clu, an algorithm for rule clustering, identifying cluster-representative rules (“proxy rules”), and minimizing the proxy rules shown to the domain expert. Our algorithm tackles the problem of high instance overlap in sets of rules generated by subgroup discovery algorithms. By limiting the number of rules, time spent for rule inspection is reduced. SD-Clu nominates a representative rule from a hierarchical clustering from a large set of rules and thus returns rules that express distinct subpopulations, i.e., rules that cover different sets of instances. The introduced representativeness measure assesses whether instances are similarly often covered by representatives as by the total rule set. SD-Clu was evaluated on two samples from an epidemiological study where an optimal set of proxy rules was selected that (i) contains considerably fewer rules than the total rule set and (ii) is more representative compared to the baseline approaches, respectively. SD-Clu can not only be applied for subpopulation discovery in epidemiological data but high-dimensional medical datasets in general. In the absence of a concrete target variable, unsupervised methods for detecting subpopulations (or phenotypes) are needed. Furthermore, more sophisticated visualizations are needed if subpopulations are characterized by many variables rather than just a few. We present our solution to these challenges in the following chapter.